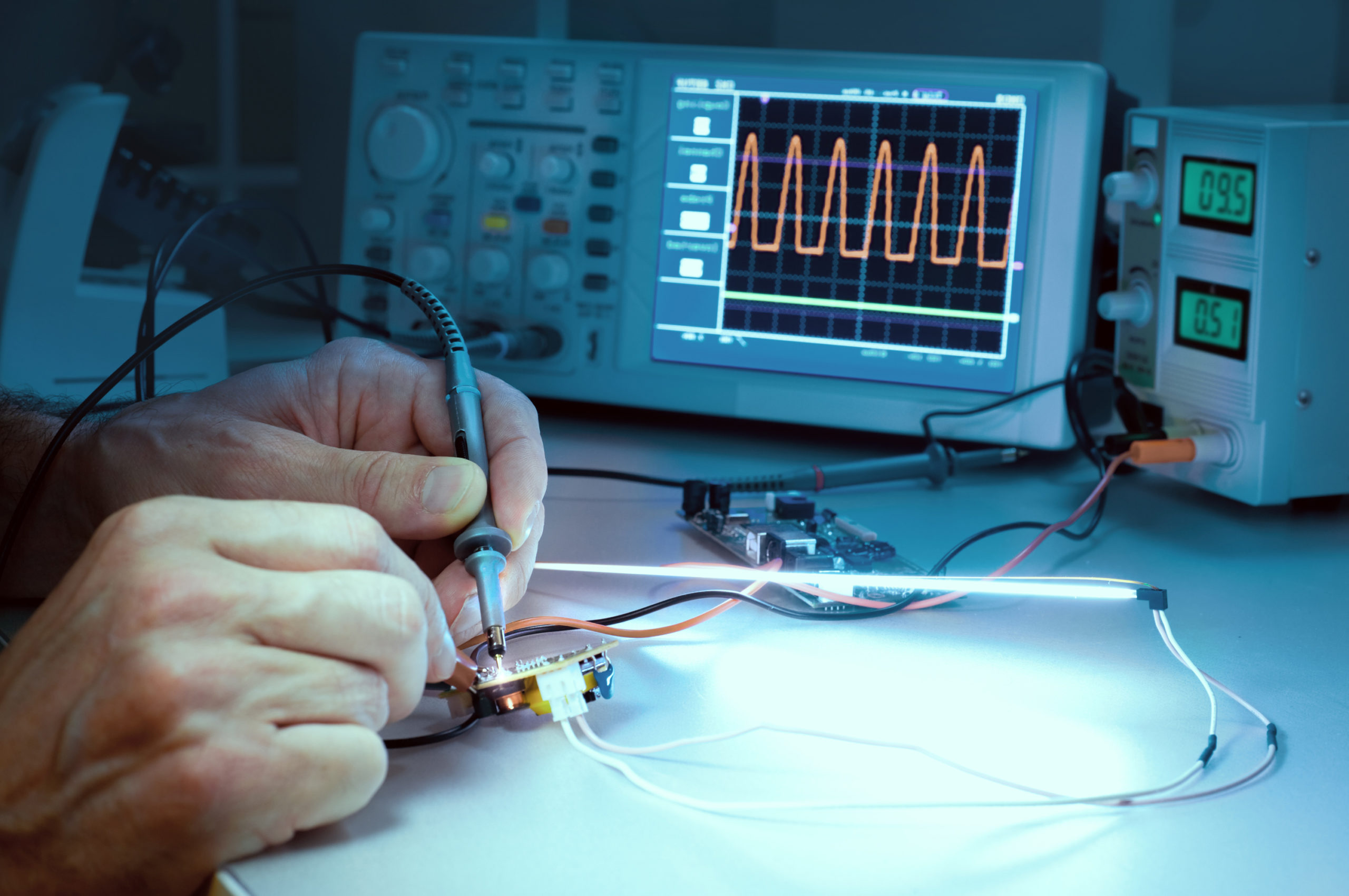

Developing successful test systems and procedures is a critical component of product design. Good test system design leads to designs that meet requirements, reliable schedules, and smooth production ramps. Including test system development in the design flow from the beginning of a project creates an avenue for cross-functional collaboration and facilitates early learning, accelerating product delivery and derisking the project, especially for complex designs. Test early and test often to maximize learning at a point in the project when there is still flexibility to rework or change direction without compromising the project. Building testbeds for new functional blocks, testing those testbeds, and testing initial prototypes as thoroughly as possible greatly improve the odds of a successful product.

Test system design is an often underestimated task. Because testing usually happens later in the product development process, test planning and development are often delayed, underestimated, or treated as an afterthought. If the product is designed well, it’s going to work well, right? Not necessarily. Test engineers live by the motto: if it’s not tested, it’s broken. Every design brings surprises and learnings. To learn the right lessons, design parameters and functionality must be well tested. Drawing the correct conclusions about product readiness requires accurate testing, and accurate testing requires careful preparation well before the initial prototypes and testbeds arrive. What’s the first thing to be scaled back when trying to reduce cost or speed up delivery of a product? You guessed it: testing. Good test system and procedure development practices are fundamental to designing a successful product that meets requirements, avoids schedule delays, and doesn’t fail during development or in the field. Avoid the common pitfall of scaling back and delaying test.

Steps of Successful Test System Design

Step 1: Define the Requirements

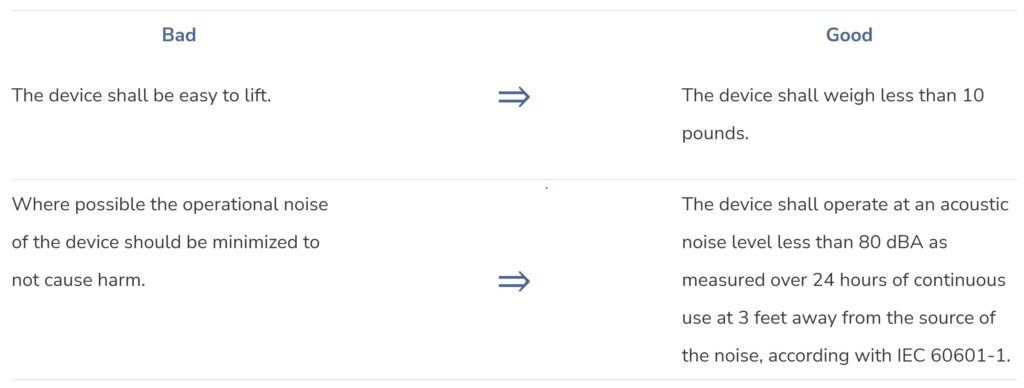

The first step to successful test system development (and product design) is to carefully define the requirements. Remember: if it’s not worthy of being tested, it’s not a requirement. Start from the top-level product requirements, including behaviors, and develop a product architecture that breaks them down into the parts, components, and subsystems (“subsystems” for short from here on) that implement those requirements and behaviors. Meticulously define the interactions and dependencies between subsystems. For a more detailed guide on developing requirements, read the Developing a Product Requirements Document whitepaper.

Then convert these requirements, behaviors, and interactions into objectively measurable performance requirements for each subsystem, including pass/fail limits for each measurement. Once an exhaustive set of requirements has been generated, design the tests that can evaluate each one. Some requirements need to be explicitly measured, some can be verified by inspection (“product must have a power switch”), and others can be inferred from system behavior. For example, the transaction parameters of a serial link between subsystems may not need to be measured directly if there is good justification to expect the link to work across all expected conditions; a go/no-go metric such as no dropped transactions can be used instead.
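One way to make the requirement list concrete is to record each requirement together with its verification method and pass/fail limits. The sketch below is a minimal illustration, assuming a hypothetical `Requirement` record, `Method` enum, and requirement IDs; it is not any specific tool’s schema. Measured requirements are checked against numeric limits, while inspected and inferred requirements are treated as go/no-go results.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Method(Enum):
    MEASURE = "measure"  # explicitly measured against numeric limits
    INSPECT = "inspect"  # verified by inspection, e.g. "has a power switch"
    INFER = "infer"      # inferred from behavior, e.g. "no dropped transactions"


@dataclass(frozen=True)
class Requirement:
    req_id: str                    # hypothetical ID scheme
    subsystem: str
    description: str
    method: Method
    low: Optional[float] = None    # lower pass/fail limit (MEASURE only)
    high: Optional[float] = None   # upper pass/fail limit (MEASURE only)
    units: str = ""

    def evaluate(self, result) -> bool:
        """Return True if `result` satisfies this requirement."""
        if self.method is Method.MEASURE:
            if result is None:     # no data collected counts as a failure
                return False
            value = float(result)
            if self.low is not None and value < self.low:
                return False
            if self.high is not None and value > self.high:
                return False
            return True
        # INSPECT and INFER are go/no-go: only an explicit True passes.
        return result is True


requirements = [
    Requirement("PWR-001", "power", "3.3 V rail voltage", Method.MEASURE, 3.2, 3.4, "V"),
    Requirement("MECH-004", "enclosure", "Has a power switch", Method.INSPECT),
    Requirement("COMM-010", "serial link", "No dropped transactions", Method.INFER),
]
results = {"PWR-001": 3.31, "MECH-004": True, "COMM-010": False}
for req in requirements:
    # Prints: PWR-001 PASS, MECH-004 PASS, COMM-010 FAIL (one per line)
    print(req.req_id, "PASS" if req.evaluate(results[req.req_id]) else "FAIL")
```

A missing measurement (`None`) counts as a failure here, which is one simple way to keep a test from silently passing when no data was collected.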

Generating good test limits is essential to making a successful product. If the limits are too tight, it will be hard to produce enough units at a reasonable cost. If the limits are too loose, performance can no longer be guaranteed. Limits start with design expectations, are refined through product characterization, and are then tightened to account for repeatability, reproducibility, environmental conditions, and the expected lifetime drift of both the product and the test system. Be careful with inferred or guaranteed-by-design metrics: make sure there is a solid justification for each of these determinations, and that the assumptions behind them will hold over the product’s lifetime and across the conditions in which it will be used.
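The tightening step can be sketched as a guard band subtracted from each side of the design window. This minimal example assumes each error source (repeatability, reproducibility, environment, drift) has already been expressed as an independent 1-sigma value, combines them by root-sum-square, and scales the result by a coverage factor `k` (2 here, a common but not universal choice). The function name is hypothetical, real guard-banding policies vary, and worst-case (linear) addition is more conservative when the error sources are not independent.

```python
import math


def guard_banded_limits(spec_low, spec_high, sigmas, k=2.0):
    """Tighten design limits by a guard band (hypothetical helper).

    Assumes each entry in `sigmas` is an independent 1-sigma error
    contribution, so they are combined by root-sum-square and scaled by k.
    """
    combined = math.sqrt(sum(s * s for s in sigmas))
    guard = k * combined
    test_low, test_high = spec_low + guard, spec_high - guard
    if test_low >= test_high:
        # The error budget eats the whole window: improve the measurement system.
        raise ValueError("guard band consumes the entire spec window")
    return test_low, test_high


# Assumed example: 3.3 V rail with a 3.2 V to 3.4 V design window.
low, high = guard_banded_limits(3.2, 3.4, sigmas=[0.003, 0.004])
```

With these assumed numbers the combined 1-sigma error is 5 mV, so the production test window shrinks by 10 mV on each side, to about 3.21 V to 3.39 V. If the guard band would consume the whole window, the function raises, which signals that the measurement system, not the limits, needs to improve.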

Step 2: Define the Measurement Processes and Equipment

The second step to successful test system development is to define the measurement processes and equipment needed. For each requirement that needs explicit testing, determine how to measure the parameter accurately without the measurement system altering it. Assess each subsystem requirement, determine how much loading from the measurement equipment can be applied without significantly altering the measured parameter, and compare this with the measurement system specs. For example, the input impedance of a digital multimeter making a voltage measurement should be high enough that it does not significantly alter the current flowing through the node being measured. The accuracy of the measurement equipment should be compared to the pass/fail limits, taking into account parameters like measurement noise and repeatability. An often overlooked requirement of test systems is that tests should fail if a unit under test is not connected.

Another important consideration is the cost and availability of the measurement equipment. It is most likely impractical to require production testing at an RF lab to make sure each unit passes EMI testing. Likewise, it may not make sense to require a very costly piece of test equipment for some measurements. Determine how much of the testing the unit can perform on itself.
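The multimeter example can be checked with a simple Thevenin model: the measured node is an ideal source `v_source` behind a source resistance `r_source`, and the meter adds a load `r_input`. The sketch below computes the resulting reading error and compares it to a fraction of the pass/fail window. The function names, the example resistances, and the 10% threshold (`max_fraction`) are assumptions for illustration, and a real meter input is not purely resistive, so treat this as a first-order check only.

```python
def loading_error(v_source, r_source, r_input):
    """Return (reading, error) for a meter with input resistance r_input
    connected to a node modeled as v_source behind r_source (Thevenin model)."""
    # The source resistance and meter input form a voltage divider.
    reading = v_source * r_input / (r_source + r_input)
    return reading, reading - v_source


def loading_is_acceptable(v_source, r_source, r_input,
                          limit_low, limit_high, max_fraction=0.1):
    """Hypothetical rule of thumb: the loading error may use at most
    max_fraction of the pass/fail window."""
    _, error = loading_error(v_source, r_source, r_input)
    return abs(error) <= max_fraction * (limit_high - limit_low)


# Assumed example: 3.3 V node behind 100 kOhm, limits 3.2 V to 3.4 V.
print(loading_is_acceptable(3.3, 100e3, 10e6, 3.2, 3.4))  # 10 MOhm input: False
print(loading_is_acceptable(3.3, 100e3, 10e9, 3.2, 3.4))  # 10 GOhm input: True
```

With a 10 MΩ input, the reading drops by about 33 mV (3.3 V × 100 kΩ / 10.1 MΩ), roughly a sixth of the 200 mV window; a 10 GΩ input reduces the error to tens of microvolts.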

Step 3: Determine the Level of Accuracy Needed for Testing

To scale the test system to production levels, all measurement non-idealities must be combined statistically to determine whether the measurement system is accurate enough. Accuracy requirements are set by the product-level definition of an acceptable rate of test escapes (units that don’t meet spec getting into the field). A standard test engineering practice is to use an initial set of units and test systems to generate repeatability and reproducibility data. Repeatability is a measure of the statistical distribution of results when a measurement is set up once and repeated over and over. Reproducibility is a measure of the statistical distribution of results when the measurement system is set up repeatedly, ideally with different measurement machines and operators. For each of these sets of tests, use a “golden” unit whose expected result is known.
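The repeatability and reproducibility data can be reduced to a few numbers with basic statistics. The sketch below is a simplified estimate, not a full ANOVA-based gauge R&R study: it assumes each setup (machine, operator, or fixture re-mount) measures the same golden unit the same number of times, pools the within-setup variance as repeatability, uses the spread of setup means as reproducibility, and reports bias against the golden unit’s known value. The function name and example data are hypothetical.

```python
import math
import statistics


def repeatability_reproducibility(setups, golden_value):
    """Simplified R&R estimate for one golden unit (hypothetical helper).

    setups: list of lists; each inner list holds readings repeated without
    re-setting up the measurement. Assumes equal-length inner lists.
    """
    # Repeatability: pooled within-setup standard deviation.
    pooled_var = statistics.fmean([statistics.variance(r) for r in setups])
    repeatability = math.sqrt(pooled_var)
    # Reproducibility: standard deviation of the per-setup means. This still
    # contains some repeatability, so it slightly overstates reproducibility.
    means = [statistics.fmean(r) for r in setups]
    reproducibility = statistics.stdev(means)
    # Bias: offset of the grand mean from the golden unit's known value.
    bias = statistics.fmean(means) - golden_value
    # Total measurement spread, assuming the two components are independent.
    total = math.sqrt(repeatability ** 2 + reproducibility ** 2)
    return {"repeatability": repeatability, "reproducibility": reproducibility,
            "bias": bias, "total": total}


# Assumed data: two setups measuring a golden unit whose known value is 1.2.
stats = repeatability_reproducibility([[1.0, 1.2], [1.2, 1.4]], golden_value=1.2)
```

The `total` figure (or a multiple of it) is what gets compared against the pass/fail window when deciding whether the measurement system is accurate enough.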

Step 4: Provide Dashboards for Sharing Testing Results

Test data is not useful if it cannot be accessed and understood by interested parties. A standard practice is to use a dashboard system that lets people evaluate the state of the product without needing much specialized knowledge to understand the results. Result summaries are a great way to communicate test data, and a good dashboard lets each user choose which summaries are relevant to them. The engineering lead, for example, may not want the details of specific tests, but rather which subsystems or functions are failing. The best dashboards make it easy to export data into presentations, such as executive summaries, that non-technical personnel can understand.
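A dashboard’s subsystem-level view is essentially a roll-up of individual test results. The sketch below assumes a hypothetical record layout of `(subsystem, test_name, passed)` tuples, summarizes pass/fail counts per subsystem, and exports the summary as CSV text that can be pasted into a spreadsheet or presentation. A production dashboard would typically sit on a database and add history and filtering; this only illustrates the aggregation idea.

```python
import csv
import io
from collections import defaultdict


def summarize_by_subsystem(results):
    """Roll individual test results up to one entry per subsystem.

    results: iterable of (subsystem, test_name, passed) tuples
    (a hypothetical record layout).
    """
    summary = defaultdict(lambda: {"passed": 0, "failed": 0, "failing_tests": []})
    for subsystem, test_name, passed in results:
        row = summary[subsystem]
        if passed:
            row["passed"] += 1
        else:
            row["failed"] += 1
            row["failing_tests"].append(test_name)
    return dict(summary)


def export_csv(summary):
    """Return the summary as CSV text, one row per subsystem, sorted by name."""
    buf = io.StringIO()
    writer = csv.writer(buf)
    writer.writerow(["subsystem", "status", "passed", "failed", "failing_tests"])
    for subsystem in sorted(summary):
        row = summary[subsystem]
        status = "FAIL" if row["failed"] else "PASS"
        writer.writerow([subsystem, status, row["passed"], row["failed"],
                         ";".join(row["failing_tests"])])
    return buf.getvalue()


# Assumed example results from one test run.
run = [("power", "rail_3v3", True), ("power", "rail_1v8", False),
       ("comms", "no_dropped_tx", True)]
print(export_csv(summarize_by_subsystem(run)))
```

The engineering lead sees at a glance that the power subsystem is failing and which test is responsible, without reading individual measurement logs.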

Step 5: Develop a Plan for Life Testing if Required

If performance needs to be guaranteed over the lifetime of a product, procedures should be put in place to ensure this. These can include periodic field calibration, accelerated lifetime testing, burn-in before final test, and stress testing. Burn-in generally consists of running the product through a tight loop of functional tests repeatedly for a period of time before putting the unit through final test. Examples of accelerated lifetime testing include running the product at “stress” levels of voltage, temperature, humidity, vibration, timing, etc. for a period of time. Stress levels can be set by design engineers or determined by finding where units fail.
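The burn-in loop described above can be sketched as follows. The function name and the test callables in `tests` are hypothetical hooks into the unit’s functional tests, and the `clock` parameter is injectable only so the loop can be exercised without waiting. This version stops at the first failure and reports how many full cycles completed; whether to stop early or log and continue is a policy decision for the specific product.

```python
import time
from typing import Callable, Dict


def burn_in(tests: Dict[str, Callable[[], bool]], duration_s: float,
            clock: Callable[[], float] = time.monotonic) -> dict:
    """Run a tight loop of functional tests until duration_s elapses.

    tests: mapping of test name -> callable returning True on pass
    (hypothetical hooks). Stops at the first failure.
    """
    start = clock()
    cycles = 0
    while clock() - start < duration_s:
        for name, test in tests.items():
            if not test():
                # Report the failing test and how many full cycles passed first.
                return {"passed": False, "cycles": cycles, "failed_test": name}
        cycles += 1
    return {"passed": True, "cycles": cycles, "failed_test": None}


# Example with stand-in tests; a real burn-in would run for hours.
result = burn_in({"self_test": lambda: True, "io_loopback": lambda: True},
                 duration_s=0.01)
print(result["passed"], result["cycles"])
```

Units that pass burn-in then proceed to final test; the recorded cycle count documents how much exercise each unit received.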

Conclusion

Good test system design leads to predictable, consistent manufacturing and happy customers. Careful planning and good test procedure development allow products to get to market quickly while reducing the risk of failures over their lifetime. Testing early and often lets the team allocate its resources based on data. Accessible dashboards help executives make good decisions and are a great communication tool for the entire team to understand the state of the project. Solid testing is the bedrock of product design, and ultimately produces reliable products that customers love.