In the first part of this blog series, we took a history lesson in optimal product development by looking at the first personal computer and considering what it takes to design and develop a breakthrough product. After that exhilarating history lesson, it is time to dive a little deeper into the engineering disciplines and trade-offs to consider when developing a new product.

INTERRELATED ENGINEERING DISCIPLINES: UNDERSTANDING THE TRADE-OFFS

Now that we have a general idea of how to affirm “what” the product will be, the next part is crucial:

Understanding that trade-offs must be made to establish the division of responsibility for performing system functions between the software/firmware, electrical, and mechanical components.

The task of making these important choices up-front usually falls on a systems engineer, with support from other engineers as needed. The output, the so-called product architecture, will be a roadmap for the entire development effort, for better or worse.

Frequently, engineers set goals of making products that push the boundaries of performance in several directions. Without a clear understanding of the trade-offs required, the product development effort can get bogged down, leading to disastrous results. Rather than pushing all the boundaries, note that there is often a market opportunity for a product that just focuses on being smaller or faster or easier to use. DEC struggled to compete in a large-computer market dominated by IBM until it decided to create the smallest, cheapest, most user-friendly machines possible, starting with the PDP-1.

How then can we arrange mechanical, electrical, and firmware components to give us a reasonable chance to produce an elegant, simple product? We consider a hierarchy, with mechanical elements at the bottom, electrical next, and firmware at the top. The three rules of system decomposition guide us to push complexity upwards from mechanical pieces to firmware.

The First Rule of System Decomposition states that, if possible, push the complexity into the microprocessor’s firmware. The cost of a microprocessor to do a task will only continue to decrease. The on-chip storage capacity for firmware also continues to grow, and changing firmware is the easiest way to modify or add functionality in a system. In contrast, many mechanical parts, and certainly electro-mechanical assemblies (motors, solenoids), are already at rock-bottom prices and unlikely to go much lower.
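As one hypothetical illustration of the First Rule, consider a push button whose contacts bounce. Instead of adding a filter circuit, a few lines of firmware can ignore the bounce. The sketch below is in Python for readability (real firmware would more likely be written in C), and the `Debouncer` class, the sample count, and the polling approach are all assumptions made for this example.

```python
class Debouncer:
    """Counter-based debounce: accept a new input level only after it
    has been seen on `stable_samples` consecutive polls."""

    def __init__(self, stable_samples=3, initial=False):
        self.stable_samples = stable_samples  # assumed value, tune per switch
        self.state = initial                  # last accepted (debounced) level
        self._count = 0                       # consecutive polls that disagree

    def update(self, raw):
        """Feed one raw sample; return the debounced level."""
        if raw == self.state:
            self._count = 0                   # input agrees, reset the counter
        else:
            self._count += 1
            if self._count >= self.stable_samples:
                self.state = raw              # change held long enough, accept it
                self._count = 0
        return self.state


# A noisy press: the raw signal flickers before settling high.
raw_samples = [False, True, False, True, True, True, True, False, True, True]
button = Debouncer(stable_samples=3)
debounced = [button.update(s) for s in raw_samples]
```

Changing the filter behavior here means editing one number, whereas the hardware equivalent would mean changing parts on the board.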

The Second Rule of System Decomposition states that, if possible, push functionality into electronics, once the First Rule is applied. Electronics (besides microprocessors) continue to decrease in cost. This is especially true of the analog-meets-digital A/D and D/A converters that are most often right on the microcontroller chip itself. Interfacing to the real world via analog voltages and currents has become fairly easy and inexpensive. Yes, high-speed and high-precision applications will still require more expensive components, but as a rule, any physical value or process that can be represented by a voltage or current can be easily handled by today’s electronics.
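To make the "voltage in, number out" idea concrete, here is a minimal sketch of what firmware does with a reading from an on-chip A/D converter. The 12-bit resolution, the 3.3 V reference, and the linear temperature sensor (0.5 V offset, 10 mV per degree Celsius) are assumptions chosen for illustration, not values from any particular product.

```python
def adc_counts_to_volts(counts, vref=3.3, bits=12):
    """Convert a raw ADC reading to volts (assumed 12-bit, 3.3 V reference)."""
    full_scale = (1 << bits) - 1              # 4095 for a 12-bit converter
    if not 0 <= counts <= full_scale:
        raise ValueError("ADC reading out of range")
    return counts * vref / full_scale


def volts_to_celsius(volts, offset_v=0.5, volts_per_degree=0.010):
    """Map sensor voltage to temperature for a hypothetical linear sensor."""
    return (volts - offset_v) / volts_per_degree


# Example: a raw reading of 931 counts is about 0.75 V, or about 25 degrees C.
reading = 931
temperature_c = volts_to_celsius(adc_counts_to_volts(reading))
```

Swapping in a different sensor changes only the two calibration constants, which is the kind of flexibility the first two rules are after.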

Another basic building block that continues to increase in capacity and decrease in cost is memory. The PDP-1 had 9,216 bytes of memory [2]. Not Kbytes. Not Mbytes. Not Gbytes. A modern smartphone’s computer has 4GB and it fits in your pocket with a battery that lasts all day. Today, a chip that has all of the PDP-1’s logic and memory would be less than 0.1 mm x 0.1 mm in size.

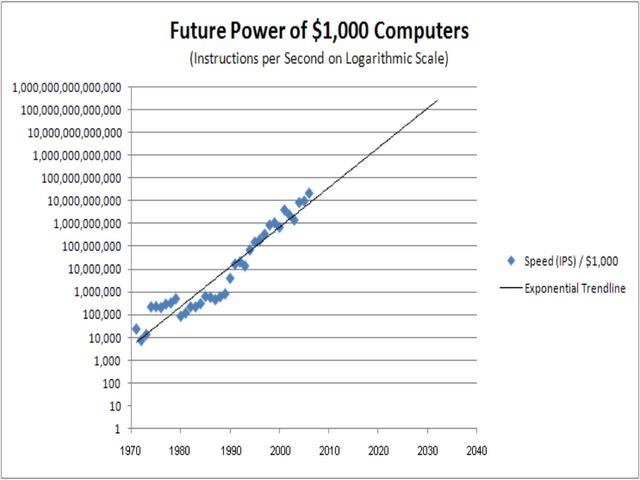

Nothing besides computation has ever seen such a rapid increase in performance together with such a decrease in cost. Yes, it is Moore’s Law, but it is also better architectures and better software.

This graph shows what a constant $1,000 will buy in computation. Had it extended back to 1960, it would include the PDP-1, which ran at 200,000 instructions per second and cost $120,000 (in 1960 dollars). The consequence of this trend is (and this is very important): The cost of computation for a fixed task is approaching $0.

Lest it seem like this is hyperbole, last year Jay Carlson wrote about the 3-cent microcontroller:

(Source: https://jaycarlson.net/2019/09/06/whats-up-with-these-3-cent-microcontrollers/)

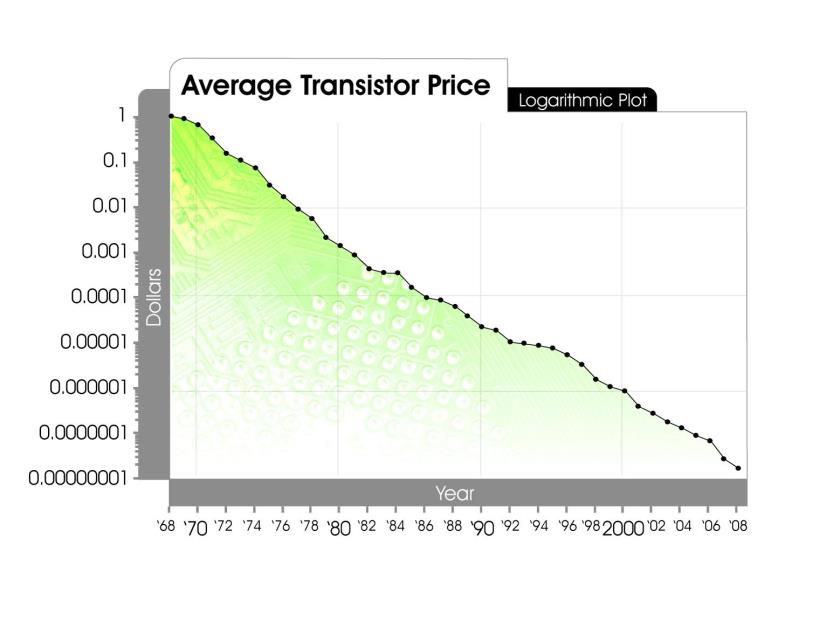

Transistors make up electronic computers; the PDP-1 used 2,700 of them. Extrapolating to 2010 prices in the graph, those would cost $0.000027. And this downward trend continues unabated to this day.

Lastly, what about those mechanical pieces? We live in the material world, so mechatronic systems require an understanding of materials and their characteristics, sensing of all types, and actuation (movement) of mechanical pieces. And so the Third Rule of System Decomposition is to use the minimal amount of mechanism in any design: use the minimal number of parts, and use existing parts if possible. (Example: Ben Gurley’s use of existing parts in the PDP-1.) Of course, it is impossible to eliminate all mechanical parts, especially for products that must perform a physical action, such as a robot as opposed to a computer. However, it is generally best to actuate electrically, control electronically, and use microcontrollers to handle the vast amount of product complexity.

MANAGING COMPLEXITY

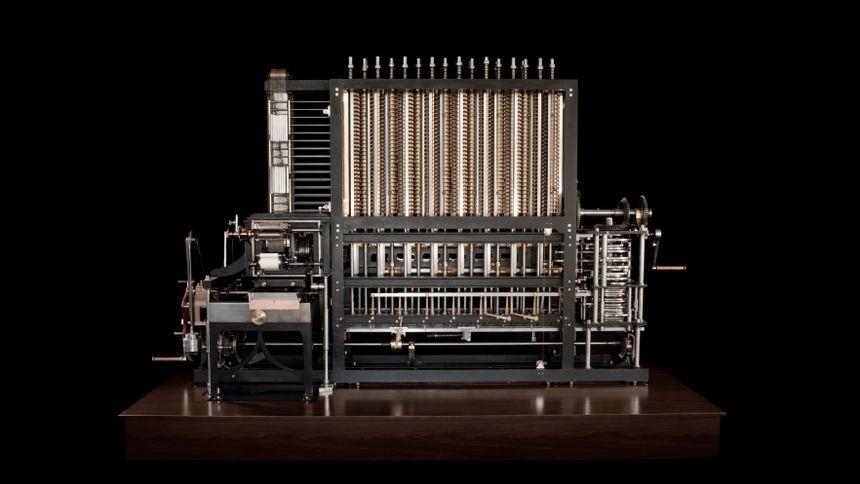

While every product has a certain amount of inherent complexity, by using these rules you can architect your product to keep unintended additional complexity from creeping in, and you can distribute the complexity according to the technology’s ability to successfully control and minimize it overall. As an example, let’s say you wanted to build a machine to calculate polynomials via the difference method, and you wanted the results to be printed out into a book-like format. If you were Charles Babbage, you’d invent this:

Pictured: A modern implementation of Babbage’s Difference Engine No. 2, all done the mechanical engineering way, with whirring gears, cams, levers, and some rotary input, as from a steam engine. Output is printed pages. Today, we would probably write some Excel macros and call it a day.

(Source: https://www.computerhistory.org/babbage/)
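For a sense of how little software the same job takes, here is a minimal sketch of the difference method in Python. Once the starting differences are known, every further value of the polynomial comes from additions alone, which is what made the method suitable for gears in the first place. The function names and the sample polynomial are assumptions chosen for illustration.

```python
def initial_differences(poly, start, step, degree):
    """Return [f(start), first difference, second difference, ...],
    computed from the first degree+1 values of the polynomial."""
    row = [poly(start + i * step) for i in range(degree + 1)]
    diffs = []
    while row:
        diffs.append(row[0])                  # leading entry of each difference row
        row = [b - a for a, b in zip(row, row[1:])]
    return diffs


def tabulate(diffs, count):
    """Produce `count` successive values using only addition."""
    d = list(diffs)
    table = []
    for _ in range(count):
        table.append(d[0])
        # Add each difference into the one above it, lowest order first,
        # so every addition uses the previous step's value.
        for i in range(len(d) - 1):
            d[i] += d[i + 1]
    return table


def f(x):
    return x * x + x + 41                     # sample degree-2 polynomial


values = tabulate(initial_differences(f, start=0, step=1, degree=2), count=5)
# values == [41, 43, 47, 53, 61]
```

The whole "engine" is two short loops, and changing the polynomial or the table length is a one-line edit rather than a new set of gears.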

CONCLUSION

Optimal product development requires a clear statement of the goal and a solid architecture to provide a roadmap to achieving the objective. A conscious decision needs to be made to control one or two of the “what”, the “by when” and the “how much” of the product.

Additionally, there needs to be flexibility in the breakdown into mechanical, electrical, and software/firmware pieces to achieve your product goal as quickly and as cost-effectively as possible. The three rules of system decomposition are applicable to a wide variety of situations, for example when the division between firmware and hardware (electrical and mechanical) is made to minimize hardware costs or reduce product complexity.

DEC, led by the visionary engineer Ben Gurley, used these principles to create the first personal computer. The PDP-1 represented the best in the world that could be produced in technical computers at the time for the market it served. In studying the PDP-1, we have learned how breakthrough products that meet time-to-market, budget, and performance constraints are designed. With a little luck, these principles might also help you create the first of a class of product [3].

2 This funny number arises because the PDP-1 had a 4K-word (4,096-word) memory of 18-bit words. The 8-bit byte had not yet become the standard unit of memory! If we take the total number of bits, 4,096 x 18 = 73,728, and divide by 8 bits per byte, we get 9,216 bytes.

3 DEC later produced many advanced computers. One of them, the PDP-11, was the machine on which UNIX matured (after starting out on DEC’s earlier PDP-7) and on which the C programming language was born. C remains one of the oldest systems programming languages still in common use.